Amid all of the (understandable) fuss that is frequently made about both on-page and off-page search engine optimisation (SEO), it’s easy to forget sometimes that SEO doesn’t always neatly fall into either on-page or off-page categories.

In fact, there’s another category of your site’s SEO that, if you don’t get it right, might pretty much kill your chances of achieving results from the aforementioned two. That category is the vital foundation that is technical SEO.

Now, there’s no reason to feel too intimidated by that word, ‘technical’. It’s basically just referring to the various ‘under-the-hood’ aspects of your site that could impact on its performance, crawlability and indexation – and ultimately, how well it ranks in the search engines.

To assess the health of your site’s technical SEO, though, you’ll need to carry out a website technical audit from time to time. Below, we’ve listed some of the things that such an audit should cover.

Site Security

Now, as much as we’re sure you’ll agree with us that it’s really important for your site to be as secure as possible, you might be wondering what the relevance of it is to SEO. Well, allow us to explain.

There’s a key distinction to be made between sites that use HTTP (Hypertext Transfer Protocol) and those that instead use HTTPS (Hypertext Transfer Protocol Secure). The difference is that the information passing between server and browser isn’t encrypted when you use HTTP, which means that it could be intercepted and read by a third party.

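HTTPS, by contrast, addresses this by using a Secure Sockets Layer (SSL) certificate (strictly speaking, modern sites rely on SSL’s successor, Transport Layer Security or TLS, although the ‘SSL’ name has stuck), which creates a secure encrypted connection between the server and the browser.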

As for the relevance to your site’s SEO, Google has said in the past that it gives a minor rankings bump to HTTPS sites over non-HTTPS ones. Plus, users are simply more likely to place their trust in a site that they know to be secure, which will help its rankings over time as well.

Tools like WebSite Auditor can be invaluable for enabling you to quickly and easily pick out the non-HTTPS resources on your site as part of the complete website technical audit.
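To give a feel for what ‘picking out non-HTTPS resources’ means in practice, here is a minimal Python sketch that scans a page’s HTML for anything referenced over plain http://. It is only an illustration and not how WebSite Auditor works internally; the class name, function name and sample markup are our own assumptions.

```python
from html.parser import HTMLParser


class InsecureResourceFinder(HTMLParser):
    """Collects src/href values that point at plain http:// URLs."""

    # Attributes that typically reference a resource or a link target.
    URL_ATTRS = {"src", "href"}

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in self.URL_ATTRS and value and value.lower().startswith("http://"):
                self.insecure.append((tag, value))


def find_insecure_resources(html):
    """Return (tag, url) pairs for every http:// reference in the HTML."""
    finder = InsecureResourceFinder()
    finder.feed(html)
    return finder.insecure


# Hypothetical page markup, used purely for illustration.
sample = """
<html><head>
<link rel="stylesheet" href="https://example.com/style.css">
<script src="http://example.com/app.js"></script>
</head><body>
<img src="http://example.com/logo.png">
</body></html>
"""

for tag, url in find_insecure_resources(sample):
    print(tag, url)
```

Note that this sketch also flags ordinary outbound links that use http://, so in a real audit you would probably want to treat scripts, stylesheets and images separately from links.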

Mobile Friendliness

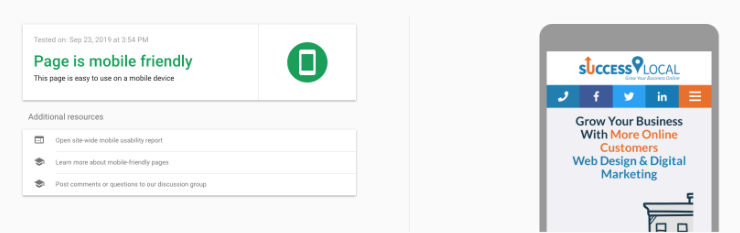

The spring of 2018 saw Google finally begin to migrate sites to mobile-first indexing. What this means, in plain English, is that Google is now indexing the mobile versions of websites instead of the desktop versions. This could have massive implications for your site’s search rankings if you’ve been performing SEO tweaks to your desktop site, while ignoring your mobile site.

Thankfully, Google provides a free tool that enables you to easily check how mobile-friendly your site is. The aforementioned WebSite Auditor, meanwhile, will help you to undertake a more in-depth analysis of your site’s mobile friendliness.

Speed

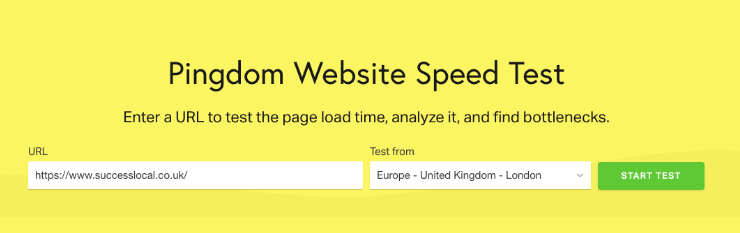

As far back as 2010, Google was telling us that it aimed for pages to load in half a second or less, and the search engine has also officially confirmed speed to be one of its key ranking factors.

Of course, this should be no big surprise, given that the speed at which pages load has a massive impact on the user experience (UX) that a particular site provides, and Google won’t want to give high rankings to your site if it’s slow.

Again, Google has a handy tool for assessing your page speed in the form of PageSpeed Insights, and the Pingdom Tools site allows you to test page speed as well. But more specialised tools are also available for addressing certain specific aspects of your site that may be slowing down its loading times – for example, Cloudinary’s website speed test image analysis tool.

Duplicate Content

Duplicate content is the same content present on several URLs, and while it’s a myth that Google will actively penalise you if your site has a lot of it, it’s still hardly a great thing for your SEO.

After all, you’re unlikely to be looked upon very favourably by human users if you keep providing content on certain pages that can be found elsewhere, word for word. It’s confusing for search engine spiders too, given that they’ll need to determine which of these duplicate pages to rank.

Thankfully, there’s a range of tools out there that can assist you in identifying duplicate content on your own site, ranging from Copyscape and Screaming Frog to Sitebulb and SEMrush.
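If you are curious about the basic idea behind spotting word-for-word duplicates, the sketch below groups pages whose text is identical once spacing and capitalisation are ignored. It is a simplified illustration and not the method any of the tools above actually use; the function names and sample pages are hypothetical, and it will not catch near-duplicates.

```python
import hashlib
import re
from collections import defaultdict


def fingerprint(text):
    """Hash the text after collapsing whitespace and lowercasing it."""
    normalised = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalised.encode("utf-8")).hexdigest()


def find_duplicates(pages):
    """Given {url: page_text}, return groups of URLs that share identical text."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical page text, as it might look after stripping the HTML.
pages = {
    "https://www.example.com/shoes": "Our full range of  running shoes.",
    "https://www.example.com/shoes?sort=price": "Our full range of running shoes.",
    "https://www.example.com/about": "About our company.",
}

for group in find_duplicates(pages):
    print(group)
```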

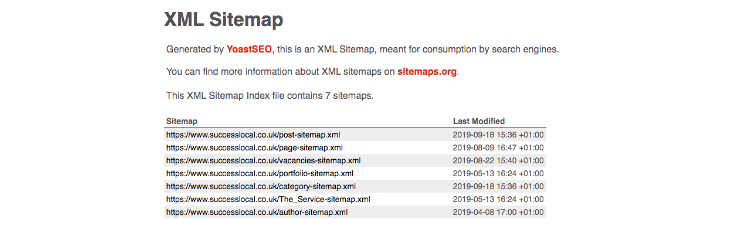

XML Sitemap

If you don’t know the difference between HTML and XML sitemaps, listen up (well, read on). While HTML sitemaps are designed for the convenience of human users trying to easily get around a website, an XML sitemap is very much aimed at the search engines, consisting of a collection of URLs all relating to your domain.

It’s therefore your site’s XML sitemap that you will need to pay particular attention to from an SEO perspective, as it’s this type of sitemap that is supposed to allow search engine spiders to crawl and index the content on your site more efficiently.

Screaming Frog can, again, be greatly helpful for picking out any errors in your XML sitemap as part of your next website technical audit. Simply open Screaming Frog, select ‘List Mode’, grab your sitemap.xml file’s URL, click ‘Start’ to begin crawling, and then sort by ‘Status Code’ to identify any potential problems with the sitemap.
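For a sense of what an XML sitemap contains, the following Python sketch reads the URLs out of one and flags a couple of simple problems. It does not replace a crawl, since it never checks status codes; the function names, the two checks chosen and the sample sitemap are our own assumptions.

```python
import xml.etree.ElementTree as ET
from collections import Counter

# Namespace used by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text):
    """Return every <loc> value listed in a sitemap's <url> entries."""
    root = ET.fromstring(xml_text)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


def sitemap_issues(urls):
    """Flag URLs that appear more than once or that are not HTTPS."""
    issues = []
    for url, count in Counter(urls).items():
        if count > 1:
            issues.append((url, "listed more than once"))
        if not url.startswith("https://"):
            issues.append((url, "not served over HTTPS"))
    return issues


# Hypothetical sitemap content, for illustration only.
sample = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>http://www.example.com/about</loc></url>
  <url><loc>https://www.example.com/</loc></url>
</urlset>"""

for url, problem in sitemap_issues(sitemap_urls(sample)):
    print(url, "->", problem)
```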

Structured Data Markup

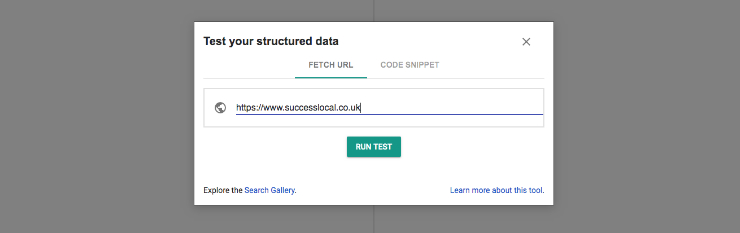

It’s fair to say that structured data markup isn’t exactly one of the best-understood aspects of SEO for many website owners. We’ll therefore quickly discuss what it is, and why it should be a key focus of your website technical audit.

‘Structured data’ is a general term simply referring to any data that is ‘organised’, or given ‘structure’ (so, for example, the equivalent of creating a chart to document that you need to go to Susan’s house at 3pm on Sunday, and then go shopping at 7pm on Monday, rather than simply trying to keep a mental note of these things).

As for what structured data means in an SEO context, it’s usually about implementing some sort of markup on your webpage to provide further detail on the page’s content.

The use of such markup can help search engines to better understand your website’s content and as a result, give your site the benefit of enhanced results in the search engine results pages – think such things as rich snippets, carousels, knowledge boxes and so on.
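As a rough illustration of what such markup can look like, the Python sketch below builds a small JSON-LD snippet using the schema.org vocabulary, which is one common way of adding structured data to a page. The business details are placeholders and the function name is our own; the properties that suit your site will depend on the type of content you publish.

```python
import json


def local_business_jsonld(name, url, telephone):
    """Build a JSON-LD <script> block describing a local business."""
    data = {
        "@context": "https://schema.org",
        "@type": "LocalBusiness",
        "name": name,
        "url": url,
        "telephone": telephone,
    }
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Placeholder values, for illustration only.
snippet = local_business_jsonld(
    "Example Bakery", "https://www.example.com/", "+44 0000 000000"
)
print(snippet)
```

The resulting script block would normally be placed in the page’s HTML, where search engines can read it alongside the visible content.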

To read more about how to use structured data markup to bolster your site’s SEO, simply check this guide from Neil Patel. Meanwhile, Google’s Rich Results Test, which has replaced its older Structured Data Testing Tool, can be used to easily review your site’s existing markup.

Google Search Console

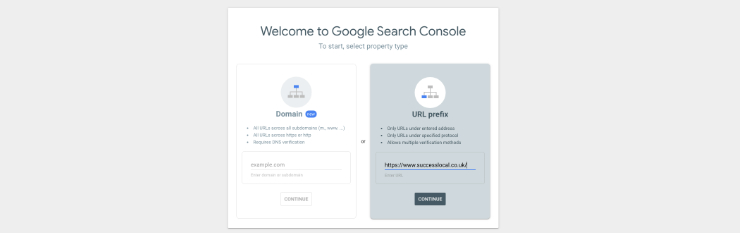

Another invaluable free tool for webmasters, Google Search Console should have a central role in your website technical audit. It enables you to assess whether Google is properly crawling and indexing your site so that you can achieve all of the rankings, traffic and conversions that your site is capable of.

After all, the better you understand how your site is performing in Google’s search engine results pages (SERPs), and whatever crawl errors, indexing issues or sitemap problems may be affecting this, the sooner you will be able to make the right changes to optimise its performance in the future.

404s & Redirects

Whatever the specific reason for a given 404 error, broken link or redirect – perhaps a page having been moved, or even a mistyped URL – it’s not good for your site’s SEO. It wastes what is known as ‘crawl budget’, which refers, in Google’s words, to “the number of URLs Googlebot can and wants to crawl”. Plus, it adds bumps in the road for the user experience.

It’s therefore really important to be able to identify and fix broken links and redirects on your site – as you can do with Screaming Frog’s SEO Spider.
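To show the kind of thing a crawler reports here, the sketch below follows a URL through its redirects and records each status code along the way. To keep it self-contained, it works from a hypothetical table of crawl results rather than making live requests, and it treats any URL missing from that table as a 404, which is purely an assumption of this example.

```python
REDIRECT_CODES = {301, 302, 307, 308}


def trace_redirects(start_url, responses, max_hops=10):
    """Follow redirects through {url: (status, location)} and return the chain."""
    chain = []
    seen = set()
    url = start_url
    while url is not None and len(chain) < max_hops:
        if url in seen:
            # We have been here before, so the redirects loop back on themselves.
            chain.append((url, "loop"))
            break
        seen.add(url)
        # Assumption: a URL with no recorded response is treated as a 404.
        status, location = responses.get(url, (404, None))
        chain.append((url, status))
        url = location if status in REDIRECT_CODES else None
    return chain


# Hypothetical crawl results: url -> (status code, redirect target).
responses = {
    "/old-page": (301, "/interim-page"),
    "/interim-page": (302, "/new-page"),
    "/new-page": (200, None),
    "/deleted-offer": (301, "/gone"),
}

print(trace_redirects("/old-page", responses))
print(trace_redirects("/deleted-offer", responses))
```

A chain with several hops, or one that ends in a 404, is usually a sign that internal links should be updated to point straight at the final destination.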

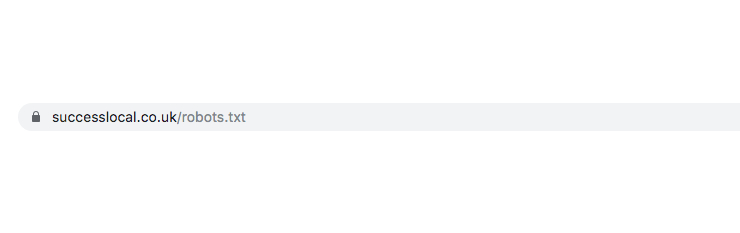

Robots.txt

Checking your robots.txt file is a step well worth making if you’ve noticed that some of your pages aren’t being indexed. It might be the case that you’ve accidentally blocked pages from search engine crawling, as you’ll discover if you look through the robots.txt file and see mentions of “Disallow: /”.

A Disallow rule tells search engine crawlers not to fetch any URL whose path begins with the value given. “Disallow: /” on its own therefore covers your whole site, while something like “Disallow: /blog/” would cover only that section. It’s vital, then, to ensure you haven’t inadvertently blocked pages that you want to be crawled and shown in the SERPs.
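If you would like to test a set of rules before relying on them, Python’s standard library includes a robots.txt parser. The sketch below is a minimal example of using it; the rules, URLs and helper function name are hypothetical, and individual search engines may interpret edge cases slightly differently from this parser.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt rules block for a crawler."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical rules: one blocks a single folder, the other blocks everything.
folder_only = """\
User-agent: *
Disallow: /private/
"""

whole_site = """\
User-agent: *
Disallow: /
"""

urls = [
    "https://www.example.com/",
    "https://www.example.com/private/report.html",
]

print(blocked_urls(folder_only, urls))
print(blocked_urls(whole_site, urls))
```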

Canonicalization

A canonical tag – also known as “rel canonical” – provides a means of telling search engines that a particular URL is supposed to be the ‘master copy’ of that page. This makes it a potentially really helpful tag for avoiding some of the problems that can arise from identical or ‘duplicate’ content appearing across several of your site’s URLs.

Canonicalization is therefore all about pointing out to search engines which version of a page you would like them to rank in their search results. As Moz explains, these tags can be invaluable for ensuring that you don’t unwittingly dilute your site’s ranking ability.
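In the page’s HTML, a canonical tag is simply a link element with rel="canonical" in the head. The Python sketch below pulls that value out of a page so you can check it points where you expect; the class name, function name and sample markup are our own assumptions.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag on a page."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values and attributes.get("href"):
            self.canonicals.append(attributes["href"])


def find_canonicals(html):
    """Return all canonical URLs declared in the HTML (ideally exactly one)."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonicals


# Hypothetical markup for a filtered product listing page.
sample = """
<html><head>
<title>Running shoes, sorted by price</title>
<link rel="canonical" href="https://www.example.com/shoes/">
</head><body></body></html>
"""

print(find_canonicals(sample))
```

A page that returns no canonical, or more than one, is worth a closer look during your audit.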

Are all of these elements being checked every time you carry out a website technical audit? Or are you remembering to undertake an audit of your site at all? Whatever the situation, Success Local is here to assist you in getting the best results out of your audits; just call 01788 288 800 to find out more.
